- Sklearn Algorithm Cheat Sheet

- Sklearn Cheat Sheet Pdf

- Sklearn Cheat Sheet

- Sklearn Model Cheat Sheet

Do you need a little help learning Scikit-Learn in Python? Or maybe you're just finding it hard to remember all the different commands for performing different operations? All of those formulas can be confusing and hard to remember. Have no fear!! I have put together 10 of the best Python Scikit-Learn cheat sheets for you to print and hang next to all your other cheat sheets on the wall above your desk. Take a little time each day to review your cheat sheets and you will have it down in no time!

Scikit Learn Cheat Sheet

Scikit-learn is a free software machine learning library for the Python programming language. It features various classification, regression, and clustering algorithms, including support vector machines, and provides simple and efficient tools for data mining and data analysis. Scikit-Learn, or sklearn, also has a K-Means implementation that can be used instead of writing one from scratch. To use it, import the KMeans class from the sklearn.cluster module and build a model with n_clusters clusters. Fit the model to the data samples using .fit. Predict the cluster that each data sample belongs to using .predict.

Keras is an easy-to-use and powerful library for Theano and TensorFlow that provides a high-level neural networks API to develop and evaluate deep learning models. We recently launched one of the first online interactive deep learning courses using Keras 2.0, called 'Deep Learning in Python'.
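Here is a sketch of the three K-Means steps described above. The six sample points are made up. The n_init and random_state values are set only to make the run repeatable.

```python
import numpy as np
from sklearn.cluster import KMeans

# Made-up 2-D samples forming two well-separated groups
samples = np.array([[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
                    [8.0, 8.0], [8.2, 7.9], [7.8, 8.1]])

# Build a model with n_clusters clusters
model = KMeans(n_clusters=2, n_init=10, random_state=0)

# Fit the model to the data samples
model.fit(samples)

# Predict the cluster that each data sample belongs to
labels = model.predict(samples)
print(labels)
```

The cluster numbers themselves (0 or 1) are arbitrary. Only the grouping is meaningful.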

Cheat Sheet 1: DataCamp

This Scikit-Learn cheat sheet from DataCamp will kick-start your data science project by introducing you to the basic concepts of machine learning algorithms. It is for those who have already started learning Python packages, and it also gives total beginners a quick first look at the basics!

Image: Scikit-learn algorithm cheat-sheet (flowchart with branches such as SVC, Ensemble Classifiers, Naive Bayes, kernel approximation, and KNeighbors Classifier).

Pros: This cheat sheet is rated ‘E’ for everyone!! Information is sectioned in blocks for easier reading

Cons: The bright red can be distracting to some

Cheat Sheet 2: Edureka.co

This Scikit-Learn cheat sheet is done in cool blues rather than the bright red of its cousin above. The information is broken down into blocks to make it easier to digest. This cheat sheet shows you the basics through examples so you can learn to preprocess the data for your projects.

Pros: Rated ‘E’ for everyone!! Information is easily digestible.

Cons: none that I can see.

Cheat Sheet 3: Intellipaat

In collaboration with IBM, Intellipaat has gone one step further with this cheat sheet. Its block headers tell you not only what you are doing but also which part of the process you are in! From pre-processing your data to post-processing your model, all the steps are laid out in one handy reference.

Pros: Rated ‘E’ for everyone. Its blocks list the steps inside, so you don’t forget which commands are used for pre/post-processing, working with the model, and evaluating its performance.

Cons: none that I can see.

Cheat Sheet 4: Cheatography

This cheat sheet is great for those who only need a quick reference for the definitions of Scikit-Learn expressions. The sheet is pretty spartan on examples compared to the others, but it goes into more depth on definitions. I would not suggest this particular cheat sheet to a total beginner in data science or in Scikit-Learn. I would rate this sheet ‘I’ for the Intermediate learner.

Pros: Great definitions for multiple expression types in Scikit-Learn.

Cons: Too spartan for beginners, green background can be distracting.

Cheat Sheet 5: Codecademy

This sheet is also intended for the Intermediate learner of Scikit-Learn. It shows examples for linear regression, Naïve Bayes, k-nearest neighbors, K-means, model validation, and training and test sets. You would do best to already know what these techniques are and what they can do. This handy reference is nice to have nearby when you just need to remember how to write your expression.

Pros: Handy for the Intermediate learner, comes with code examples

Cons: Not for beginners.

Cheat Sheet 6: becominghuman.ai

Here on becominghuman.ai, the cheat sheets show not only definitions but also flow charts. These help you navigate the documentation and pick the right estimator for the job, which can be difficult to do. This cheat sheet is for the Intermediate learner.

Pros: Great for Intermediate learners, in-depth definitions of expressions

Cons: Spartan

Cheat Sheet 7: Scikit-learn.org

This cheat sheet maps out the machine learning process, showing which classification, clustering, regression, and dimensionality reduction estimators suit which situations. It is a great map for showing how the expressions are interconnected.

Pros: Great visual

Cons: Not suggested for beginners

Cheat Sheet 8: Enthought.com

These PDFs are actually a set of three, each going in depth on one of Classification, Clustering, and Regression. The set is perfect for a complete beginner, as it gives you not only definitions and code but also tips on when to use each method and how it works!! Enthought made sure to cover everything for you, so don’t worry if you forget or need a refresher on how it all works!

Pros: Rated ‘E’ for everyone!! Goes in depth for the total beginner

Cons: Can be a lengthy read

Cheat Sheet 9: Elite Data Science

This cheat sheet is beautifully put together, showing you a step-by-step process for using scikit-learn to build and tune a supervised learning model on your own!! One con is that it does not show any examples of how the expressions are used.

Pros: Nicely put together for easy readability.

Cons: For the Intermediate learner.

Cheat Sheet 10: Lauren Glass

This last sheet is generously provided by an Instagram Data Engineer!! Lauren Glass has put together a comprehensive cheat sheet for scikit-learn and has made it easy for beginners to understand!! She goes in depth on all the sections and provides definitions for each.

Pros: Easy to read and understand

Cons: None I can see

Thanks for joining me once again!! I hope you find these cheat sheets on Scikit-Learn useful and tape them to your wall above your desk to keep them handy!! I will keep you updated on the best cheat sheets for Python and related subjects!!

By Andre Ye, Cofounder at Critiq, Editor & Top Writer at Medium.

Source: Pixabay.

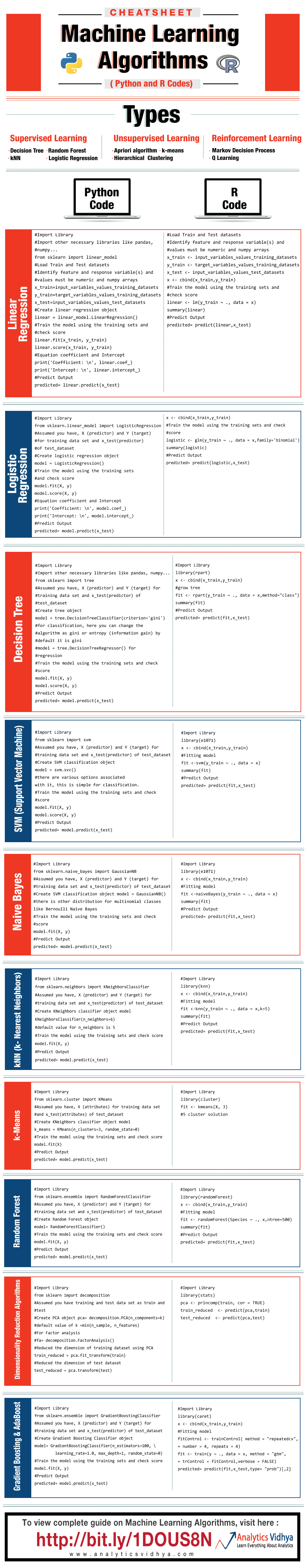

There are several areas of data mining and machine learning that will be covered in this cheat-sheet:

- Predictive Modelling. Regression and classification algorithms for supervised learning (prediction), metrics for evaluating model performance.

- Clustering. Methods to group unlabeled data into clusters: K-Means, selecting the number of clusters based on objective metrics.

- Dimensionality Reduction. Methods to reduce the dimensionality of data and attributes of those methods: PCA and LDA.

- Feature Importance. Methods to find the most important features in a dataset: permutation importance, SHAP values, Partial Dependence Plots.

- Data Transformation. Methods to transform the data for greater predictive power, for easier analysis, or to uncover hidden relationships and patterns: standardization, normalization, Box-Cox transformations.

All images were created by the author unless explicitly stated otherwise.

Predictive Modelling

Train-test splitting is an important part of testing how well a model performs: the model is trained on designated training data and tested on designated testing data. This way, the model’s ability to generalize to new data can be measured. In sklearn, lists, pandas DataFrames, and NumPy arrays are all accepted for the X and y parameters.
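Here is a minimal sketch of the split using sklearn's train_test_split. The DataFrame and its column names (feat_a, feat_b) are hypothetical. The 80/20 ratio is just a common choice.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical dataset: 100 rows, two features and a binary target
X = pd.DataFrame({"feat_a": np.arange(100), "feat_b": np.arange(100) * 2.0})
y = np.arange(100) % 2

# Hold out 20% of the rows for testing; random_state makes the split repeatable
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)
print(X_train.shape, X_test.shape)  # (80, 2) (20, 2)
```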

Training a standard supervised learning model takes the form of an import, the creation of an instance, and the fitting of the model.
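The import / instance / fit pattern looks roughly like this. A decision tree on sklearn's bundled iris dataset is assumed purely for illustration.

```python
from sklearn.datasets import load_iris
# 1. Import the model class
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# 2. Create an instance, setting any hyperparameters here
model = DecisionTreeClassifier(max_depth=3, random_state=0)

# 3. Fit the model to the training data
model.fit(X, y)
print(model.classes_)
```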

sklearn classifier models are listed below, with the branch highlighted in blue and the model name in orange.

sklearn regressor models are listed below, with the branch highlighted in blue and the model name in orange.
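Every sklearn estimator shares the same fit/predict interface, so models from different branches (modules) can be swapped freely. The sketch below fits a handful of classifiers and regressors chosen only as examples, using the bundled iris and diabetes datasets. It is not a complete catalogue.

```python
from sklearn.datasets import load_diabetes, load_iris
from sklearn.ensemble import RandomForestClassifier, RandomForestRegressor
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVR

X_clf, y_clf = load_iris(return_X_y=True)
X_reg, y_reg = load_diabetes(return_X_y=True)

# Classifiers from the linear_model, neighbors, and ensemble branches
classifiers = [
    LogisticRegression(max_iter=1000),
    KNeighborsClassifier(n_neighbors=5),
    RandomForestClassifier(n_estimators=50, random_state=0),
]
# Regressors from the linear_model, svm, and ensemble branches
regressors = [
    LinearRegression(),
    SVR(),
    RandomForestRegressor(n_estimators=50, random_state=0),
]

# Same interface for all of them: fit, then score (accuracy or R^2)
for model in classifiers:
    model.fit(X_clf, y_clf)
    print(type(model).__name__, round(model.score(X_clf, y_clf), 3))
for model in regressors:
    model.fit(X_reg, y_reg)
    print(type(model).__name__, round(model.score(X_reg, y_reg), 3))
```

These are training-set scores, shown only to confirm that each model runs. Use held-out data for a real evaluation.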

Evaluating model performance is done with train-test data in this form:

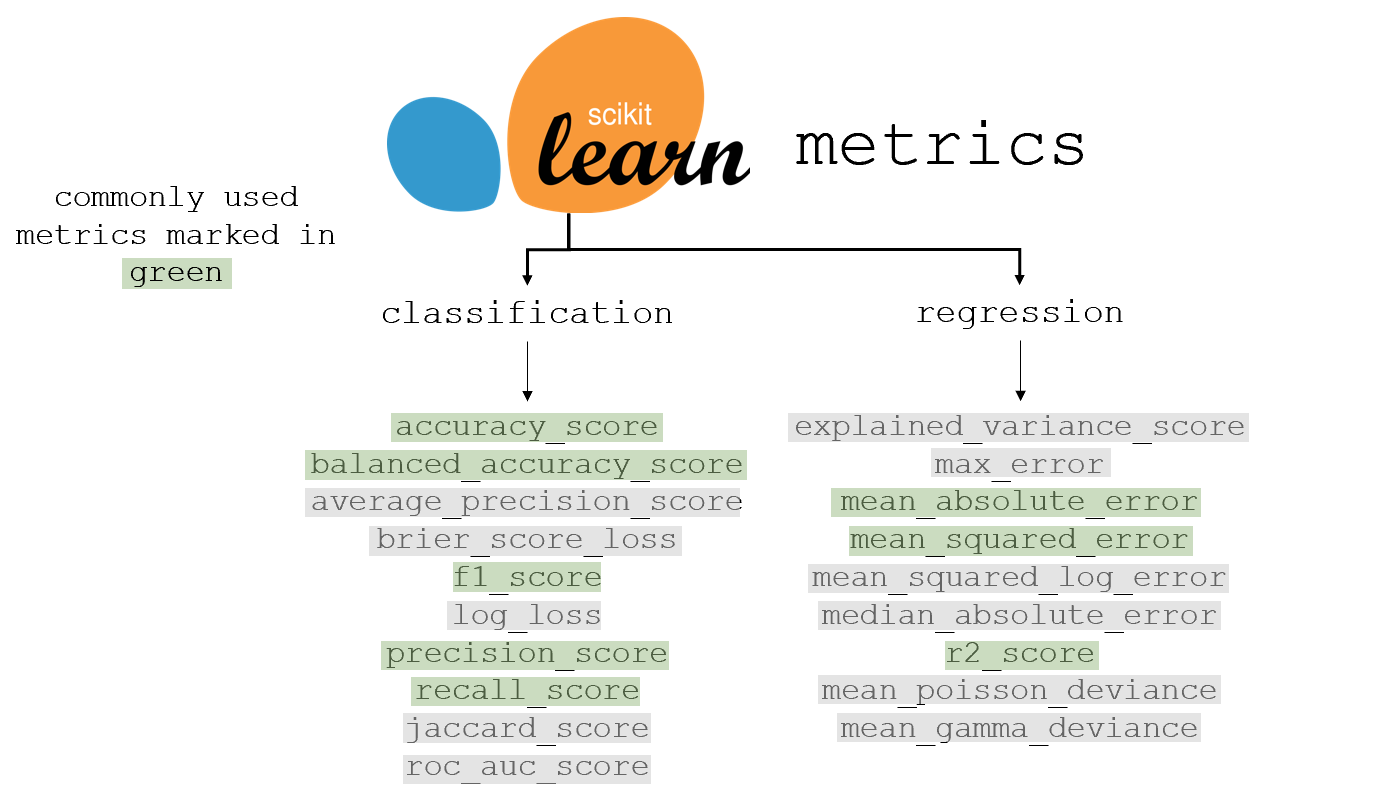
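Here is a sketch of evaluating on held-out data. It assumes a decision tree on the iris dataset with accuracy as the metric; both are illustrative choices, not necessarily the author's.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Fit on the training split only
model = DecisionTreeClassifier(random_state=0)
model.fit(X_train, y_train)

# Predict on unseen test data and compare against the true labels
y_pred = model.predict(X_test)
score = accuracy_score(y_test, y_pred)
print(f"Test accuracy: {score:.3f}")
```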

sklearn metrics for classification and regression are listed below, with the most commonly used metric marked in green. In certain contexts, many of the grey metrics are more appropriate than the green-marked ones. Each has its own advantages and disadvantages, balancing priority comparisons, interpretability, and other factors.
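Here are a few common sklearn.metrics functions computed on small made-up labels and predictions. The selection is illustrative; which metric to prioritize depends on the problem.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, f1_score, mean_absolute_error,
                             mean_squared_error, r2_score)

# Classification: made-up true labels vs. predictions
y_true_clf = [0, 1, 1, 0, 1, 1]
y_pred_clf = [0, 1, 0, 0, 1, 1]
acc = accuracy_score(y_true_clf, y_pred_clf)  # 5 of 6 correct
f1 = f1_score(y_true_clf, y_pred_clf)         # precision 1.0, recall 0.75
print("accuracy:", acc, "F1:", f1)

# Regression: made-up true values vs. predictions
y_true_reg = np.array([3.0, 5.0, 2.5, 7.0])
y_pred_reg = np.array([2.5, 5.0, 3.0, 8.0])
mae = mean_absolute_error(y_true_reg, y_pred_reg)  # mean of |errors| = 0.5
mse = mean_squared_error(y_true_reg, y_pred_reg)   # mean of squared errors = 0.375
r2 = r2_score(y_true_reg, y_pred_reg)
print("MAE:", mae, "MSE:", mse, "R^2:", r2)
```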

Clustering

Before clustering, the data needs to be standardized (information for this can be found in the Data Transformation section). Clustering is the process of creating clusters based on point distances.

Source. Image free to share.

Creating and training a K-Means clustering model produces a model that can cluster data and retrieve information about the clustered data.

The label of each data point in the training data can be accessed through the fitted model’s labels_ attribute (model.labels_).

Similarly, each data point’s label can be stored in a column of the data by assigning model.labels_ to a new column.

The cluster label of new data can be obtained with model.predict(new_data). The new_data can be in the form of an array, a list, or a DataFrame.

The cluster centers are returned as a two-dimensional array by model.cluster_centers_.
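Here is a sketch tying these K-Means accessors together. The two-feature DataFrame and its column names (x1, x2) are made up. The data is standardized first, as recommended above. New data is scaled with the same fitted scaler before predicting.

```python
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler

# Hypothetical two-feature dataset with two obvious groups
data = pd.DataFrame({"x1": [1.0, 1.1, 0.9, 9.0, 9.2, 8.8],
                     "x2": [2.0, 2.1, 1.9, 7.0, 7.1, 6.9]})

# Standardize before clustering, since K-Means is distance-based
scaler = StandardScaler()
scaled = scaler.fit_transform(data)

model = KMeans(n_clusters=2, n_init=10, random_state=0)
model.fit(scaled)

# Cluster label of each training point
labels = model.labels_

# Store each point's label in a new column of the data
data["cluster"] = model.labels_

# Cluster labels for new data (scaled with the same scaler first)
new_data = pd.DataFrame({"x1": [1.0, 9.1], "x2": [2.0, 7.0]})
new_labels = model.predict(scaler.transform(new_data))

# Cluster centers: a 2-D array of shape (n_clusters, n_features), in scaled units
centers = model.cluster_centers_
print(centers.shape)  # (2, 2)
```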

To find the optimal number of clusters, use the silhouette score, a metric of how well a given number of clusters fits the data. For each number of clusters within a predefined range, a K-Means clustering algorithm is trained, and its silhouette score is saved to a list (scores). Here, data is the x that the model is trained on.

After the scores are saved to the list scores, they can be graphed out or computationally searched for to find the highest one.
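Here is a sketch of the silhouette search. Synthetic blobs from make_blobs stand in for the article's data. The range of 2 to 8 clusters is an arbitrary choice; silhouette needs at least 2 clusters.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic stand-in for `data`
data, _ = make_blobs(n_samples=300, centers=4, cluster_std=0.6, random_state=0)

cluster_range = range(2, 9)
scores = []
for k in cluster_range:
    model = KMeans(n_clusters=k, n_init=10, random_state=0)
    model.fit(data)
    scores.append(silhouette_score(data, model.labels_))

# Computationally search for the number of clusters with the highest score
best_k = cluster_range[int(np.argmax(scores))]
print("best number of clusters:", best_k)
```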

Dimensionality Reduction

Dimensionality reduction is the process of expressing high-dimensional data in a reduced number of dimensions such that each one contains as much information as possible. Dimensionality reduction may be used for visualization of high-dimensional data or to speed up machine learning models by removing low-information or correlated features.

Principal Component Analysis, or PCA, is a popular method of reducing the dimensionality of data by drawing several orthogonal (perpendicular) vectors in the feature space to represent the reduced number of dimensions. The variable number, passed as PCA’s n_components argument, represents the number of dimensions the reduced data will have. For visualization, for example, it would be two.

Visual demonstration of how PCA works. Source.

Fitting the PCA Model: The .fit_transform function automatically fits the model to the data and transforms it into a reduced number of dimensions.

Explained Variance Ratio: Calling model.explained_variance_ratio_ will yield an array where each item corresponds to that dimension’s “explained variance ratio.” This is essentially the fraction of the variance (information) in the original data represented by that dimension. The sum of the explained variance ratios is the total fraction of information retained in the reduced-dimensionality data.

PCA Feature Weights: In PCA, each newly created feature is a linear combination of the original data’s features. These linear weights can be accessed with model.components_, and they are a good indicator of feature importance. A larger weight in absolute value indicates more information represented in that feature.
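Here is a sketch of the PCA steps above. It uses the bundled iris data, standardized first, and sets number = 2 as in the visualization case.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

X, _ = load_iris(return_X_y=True)
X_scaled = StandardScaler().fit_transform(X)

number = 2  # number of dimensions to keep
model = PCA(n_components=number)

# Fit the model and project the data in one step
reduced = model.fit_transform(X_scaled)
print(reduced.shape)  # (150, 2)

# Fraction of the original variance captured by each new dimension
ratios = model.explained_variance_ratio_
print(ratios, "total retained:", ratios.sum())

# Linear weights: one row per new component, one column per original feature
print(model.components_.shape)  # (2, 4)
```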

Linear Discriminant Analysis (LDA, not to be confused with Latent Dirichlet Allocation) is another method of dimensionality reduction. The primary difference between LDA and PCA is that LDA is a supervised algorithm, meaning it takes into account both x and y. Principal Component Analysis only considers x and is hence an unsupervised algorithm.

PCA attempts to maintain the structure (variance) of the data purely based on distances between points, whereas LDA prioritizes clean separation of classes.
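The supervised/unsupervised difference shows up directly in the calls: PCA's fit_transform takes only X, while LDA's also takes y. Here is a sketch on iris. LDA can produce at most (number of classes − 1) components, which is 2 here.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

X, y = load_iris(return_X_y=True)

# PCA is unsupervised: it only sees X
pca_result = PCA(n_components=2).fit_transform(X)

# LDA is supervised: it uses y to find directions that separate the classes
lda = LinearDiscriminantAnalysis(n_components=2)
lda_result = lda.fit_transform(X, y)
print(pca_result.shape, lda_result.shape)  # (150, 2) (150, 2)
```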

Feature Importance

Feature Importance is the process of finding the most important feature to a target. Through PCA, the feature that contains the most information can be found, but feature importance concerns a feature’s impact on the target. A change in an ‘important’ feature will have a large effect on the y-variable, whereas a change in an ‘unimportant’ feature will have little to no effect on the y-variable.

Permutation Importance is a method to evaluate how important a feature is. A trained model is scored repeatedly, each time with the values of one column randomly shuffled. The resulting decrease in model accuracy represents how important that column is to the model’s predictive power. The eli5 library is used for Permutation Importance.

In the data that this Permutation Importance model was trained on, the column lat has the largest impact on the target variable (in this case, the house price). Permutation Importance is the best tool to use when deciding which features to remove for the best predictive performance. Correlated or redundant features that actually confuse the model are marked by negative permutation importance values.
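The article uses eli5. As a swapped-in alternative, scikit-learn's own sklearn.inspection.permutation_importance implements the same shuffle-and-rescore idea. Here is a sketch, assuming a random forest on the bundled diabetes dataset rather than the article's house-price data.

```python
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

data = load_diabetes(as_frame=True)
X_train, X_val, y_train, y_val = train_test_split(
    data.data, data.target, random_state=0
)
model = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_train, y_train)

# Shuffle each validation column n_repeats times and record the drop in score
result = permutation_importance(model, X_val, y_val, n_repeats=10, random_state=0)

# Rank features from most to least important (negative = possibly harmful)
ranking = sorted(zip(X_val.columns, result.importances_mean),
                 key=lambda pair: pair[1], reverse=True)
for name, importance in ranking:
    print(f"{name:>6}: {importance:.4f}")
```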

SHAP is another method of evaluating feature importance. It borrows from cooperative game theory (Shapley values), which estimates how much value each player contributes to a team’s outcome; here, each feature is a player. Unlike permutation importance, SHapley Additive exPlanations use a more formulaic, calculation-based approach to evaluating feature importance. SHAP’s fast tree explainer requires a tree-based model (Decision Tree, Random Forest) and accommodates both regression and classification. Slower, model-agnostic explainers also exist.
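The shap library itself is not used here. To illustrate the underlying idea, the sketch below computes exact Shapley values for a single prediction by brute force over all feature subsets. It assumes a simple value function in which features outside a coalition are replaced by baseline values. shap's explainers use more refined and much faster estimators. The linear "model" is hypothetical.

```python
from itertools import combinations
from math import factorial

import numpy as np


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction (cost grows as 2**n_features)."""
    n = len(x)

    def value(subset):
        # Model output with features in `subset` taken from x, the rest from baseline
        z = baseline.copy()
        idx = np.array(subset, dtype=int)
        z[idx] = x[idx]
        return predict(z)

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                # Shapley weight for a coalition of this size
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(subset + (i,)) - value(subset))
    return phi


# Hypothetical linear model f(z) = 3*z0 - 2*z1 + 0.5*z2 + 1
weights = np.array([3.0, -2.0, 0.5])


def predict(z):
    return float(weights @ z + 1.0)


x = np.array([1.0, 2.0, 4.0])
baseline = np.zeros(3)
phi = shapley_values(predict, x, baseline)
print(phi)  # per-feature contributions
print(phi.sum(), predict(x) - predict(baseline))  # additivity: these match
```

For a linear model with a zero baseline, each contribution reduces to weight × feature value, which makes the result easy to sanity-check.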

PD(P) Plots, or partial dependence plots, are a staple in data mining and analysis, showing how certain values of one feature influence a change in the target variable. Imports required include pdpbox for the dependence plots and matplotlib to display the plots.

Isolated PDPs: the following code displays the partial dependence plot, where feat_name is the feature within X that will be isolated and compared to the target variable. The second line of code saves the data, whereas the third constructs the canvas to display the plot.

The partial dependence plot shows the effect of certain values and changes in the number of square feet of living space on the price of a house. Shaded areas represent confidence intervals.
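The pdpbox code is not reproduced here. The sketch below computes a one-feature partial dependence curve by hand, which is the quantity such plots display. It assumes a gradient boosting model on the bundled diabetes data, with feat_name set to "bmi" for illustration. scikit-learn's sklearn.inspection.PartialDependenceDisplay.from_estimator (scikit-learn 1.0+) can also draw this directly.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.ensemble import GradientBoostingRegressor

X, y = load_diabetes(return_X_y=True, as_frame=True)
model = GradientBoostingRegressor(random_state=0).fit(X, y)

feat_name = "bmi"  # the feature to isolate (illustrative choice)
grid = np.linspace(X[feat_name].min(), X[feat_name].max(), 20)

# For each grid value, set the feature to that value in every row and
# average the model's predictions: that average is the partial dependence
pdp = []
for value in grid:
    X_mod = X.copy()
    X_mod[feat_name] = value
    pdp.append(model.predict(X_mod).mean())

for v, p in zip(grid, pdp):
    print(f"{feat_name}={v:+.3f} -> average prediction {p:.1f}")
```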

Contour PDPs: Partial dependence plots can also take the form of contour plots, which compare not one isolated variable but the relationship between two isolated variables. The two features that are to be compared are stored in a variable compared_features.

The relationship between the two features shows the corresponding price when only considering these two features. Partial dependence plots are chock-full of data analysis and findings, but be conscious of large confidence intervals.
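Here is the two-feature version of the same manual computation. It assumes compared_features holds "bmi" and "bp" from the diabetes data. It fills a grid of average predictions, and passing grid2, grid1, and surface to matplotlib's contourf draws the contour plot.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.ensemble import GradientBoostingRegressor

X, y = load_diabetes(return_X_y=True, as_frame=True)
model = GradientBoostingRegressor(random_state=0).fit(X, y)

compared_features = ["bmi", "bp"]  # illustrative choice of the two features
f1, f2 = compared_features
grid1 = np.linspace(X[f1].min(), X[f1].max(), 10)
grid2 = np.linspace(X[f2].min(), X[f2].max(), 10)

# surface[i, j] = average prediction with f1 fixed to grid1[i] and f2 to grid2[j]
surface = np.empty((len(grid1), len(grid2)))
for i, v1 in enumerate(grid1):
    for j, v2 in enumerate(grid2):
        X_mod = X.copy()
        X_mod[f1] = v1
        X_mod[f2] = v2
        surface[i, j] = model.predict(X_mod).mean()

print(surface.shape)  # (10, 10)
```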

Data Transformation

Standardizing or scaling is the process of ‘reshaping’ the data such that it contains the same information but has a mean of 0 and a variance of 1. Because many algorithms are sensitive to the scale of their inputs, they can usually handle scaled data better.

The transformed_data is standardized and can be used for many distance-based algorithms such as Support Vector Machine and K-Nearest Neighbors. The results of algorithms that use standardized data need to be ‘de-standardized’ so they can be properly interpreted. .inverse_transform() can be used to perform the opposite of standard transforms.
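Here is a sketch of standardizing and then undoing it with sklearn's StandardScaler. The small array is made up.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

data = np.array([[1.0, 200.0], [2.0, 300.0], [3.0, 400.0]])

scaler = StandardScaler()
transformed_data = scaler.fit_transform(data)  # each column: mean 0, variance 1
print(transformed_data.mean(axis=0), transformed_data.std(axis=0))

# 'De-standardize' to get back to the original units
restored = scaler.inverse_transform(transformed_data)
print(np.allclose(restored, data))  # True
```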

Normalizing data puts it on a 0 to 1 scale, something that, similar to standardized data, makes the data mathematically easier to use for the model.

Normalizing doesn’t center the data around 0 as standardizing does; instead, it restricts the data to fixed boundaries. Whether to normalize or standardize data depends on the algorithm and the context.
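Normalizing to a 0 to 1 scale corresponds to sklearn's MinMaxScaler with its default feature_range. Here is a sketch on a made-up array, with the per-column arithmetic in the comments.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

data = np.array([[1.0, -50.0], [5.0, 0.0], [9.0, 150.0]])

scaler = MinMaxScaler()  # default feature_range=(0, 1)
normalized = scaler.fit_transform(data)
print(normalized)
# column 0: (x - 1) / 8     -> [0.0, 0.5, 1.0]
# column 1: (x + 50) / 200  -> [0.0, 0.25, 1.0]
```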

Box-Cox transformations involve raising the data to various powers to transform it. Box-Cox transformations can normalize data, make it more linear, or decrease its complexity. These transformations involve not only whole-number powers but also fractional powers (square rooting) and logarithms.
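For reference, here is the standard one-parameter Box-Cox transformation. Each value of the power parameter λ gives a different transformation. λ = 0.5 behaves like a square root, since (y^0.5 − 1)/0.5 = 2(√y − 1). λ = 0 is defined as the logarithm.

```latex
y^{(\lambda)} =
\begin{cases}
\dfrac{y^{\lambda} - 1}{\lambda}, & \lambda \neq 0,\\[4pt]
\ln y, & \lambda = 0,
\end{cases}
\qquad y > 0
```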

For instance, consider data points situated along the function g(x). By applying the logarithmic Box-Cox transformation, the data can be easily modelled with linear regression.
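The exact form of g(x) isn't given here. As a hedged stand-in, the sketch assumes exponential data y = 2e^(0.5x). After a log transform, log(y) = log(2) + 0.5x is exactly linear, so ordinary linear regression recovers both parameters.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical exponential data standing in for g(x)
x = np.linspace(0, 10, 50).reshape(-1, 1)
y = 2.0 * np.exp(0.5 * x.ravel())

# Fit a straight line to log(y) instead of y
model = LinearRegression().fit(x, np.log(y))
print(model.coef_[0], model.intercept_)  # ~0.5 and ~log(2), about 0.693
print(model.score(x, np.log(y)))         # R^2 of ~1.0
```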

Created with Desmos.

sklearn’s PowerTransformer automatically estimates, for each feature, the Box-Cox power parameter (λ) that makes the data best resemble a normal distribution.

Because Box-Cox transformations involve logarithms and fractional powers such as square roots, the input data must be strictly positive. Shifting the data so that its minimum is above zero can take care of this; note that normalizing to a 0 to 1 scale still leaves a zero. For data with negative as well as positive values, set method = ‘yeo-johnson’ (PowerTransformer’s default) for a similar approach to making the data more closely resemble a bell curve.
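Here is a sketch of both PowerTransformer methods on synthetic right-skewed data. The lognormal sample and the shift of 1.5 are arbitrary choices. It also shows that Box-Cox rejects non-positive input while Yeo-Johnson accepts it.

```python
import numpy as np
from sklearn.preprocessing import PowerTransformer

rng = np.random.default_rng(0)
skewed = rng.lognormal(mean=0.0, sigma=1.0, size=(500, 1))  # strictly positive

# Box-Cox: lambda is estimated per feature; output is standardized by default
bc = PowerTransformer(method="box-cox")
bc_data = bc.fit_transform(skewed)
print("estimated lambda:", bc.lambdas_)

# Shift so some values are negative; Yeo-Johnson still works
mixed = skewed - 1.5
yj = PowerTransformer(method="yeo-johnson")
yj_data = yj.fit_transform(mixed)

# Box-Cox refuses data that is not strictly positive
boxcox_rejected = False
try:
    PowerTransformer(method="box-cox").fit(mixed)
except ValueError as err:
    boxcox_rejected = True
    print("box-cox failed:", err)
```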

Original. Reposted with permission.
